Advances in temperature calibration procedures

Recent developments eliminate unnecessary calibrations and shorten the time it takes to perform calibrations in the field.

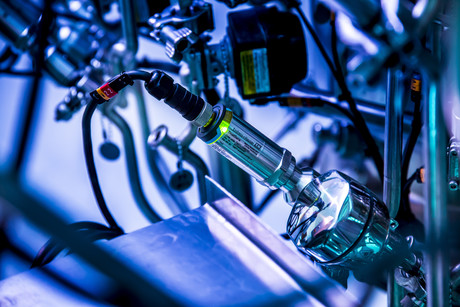

Critical processes in the pharmaceutical and biosciences industries often require frequent calibration of temperature instrumentation. Calibration typically requires shutting down a process every six months or so to remove and replace an instrument (Figure 1), then taking the instrument to a lab where it may prove to be calibrated within specs.

Recent developments in temperature sensor technology now make it possible for a sensor to determine if it actually needs calibration, thus eliminating unnecessary lab calibrations. When a sensor does need calibration, other new developments cut the time needed for a calibration in half.

In this article, we’ll look at the need for frequent calibrations in the life sciences industry, what’s involved, and how sensor technology is making calibrations easier and less expensive.

Calibrate we must!

Recently, quality risk management (QRM) has become a mandatory regulatory requirement for drug manufacturers. The US Food and Drug Administration (FDA) and the European Medicines Agency (EMA) publish guidelines and requirements which customers and vendors are expected to follow. Guidelines such as ‘Process Validation: General Principles and Practices’ by the FDA and Annex 15 issued by the EMA offer input to help drug manufacturers design processes correctly.

Also, ISO 9001:2008 (clause 7.6), GMP and WHO regulations and standards all require equipment and instrumentation to be calibrated or verified at specific intervals against measurement standards traceable to international or national standards. The main issue with scheduled calibration cycles is that the performance of instruments between calibrations goes unverified.

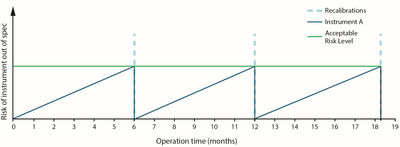

The blue line in Figure 2 shows how an instrument can deviate over time because of sensor drift, ageing and other factors before being recalibrated back to initial specifications every six months. The deviation between calibrations must always remain below the acceptable level. However, the probability of an undetected ‘out of spec’ situation gradually increases over time, and with it the risk of product quality issues.

If a temperature sensor goes out of spec before the next calibration, difficult questions will have to be addressed:

- When did it go out of spec?

- How many batches have been affected since then?

- Do the products made from those batches have to be recalled?
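
The first of these questions can be roughly bounded if one is willing to assume that the error grew linearly from zero at the last good calibration to the as-found error at the next one. Real drift is seldom that tidy, so the following Python sketch gives only a first estimate. The function and parameter names are illustrative and are not taken from any vendor tool.

```python
from datetime import date, timedelta
from typing import Optional


def estimate_out_of_spec_date(
    last_cal: date,
    found_cal: date,
    as_found_error_c: float,
    tolerance_c: float,
) -> Optional[date]:
    """Estimate when the sensor error first exceeded the tolerance.

    Assumption (not from the article): the error grew linearly from
    0 degC at last_cal to as_found_error_c at found_cal.
    Returns None if the sensor was still within tolerance when checked.
    """
    if abs(as_found_error_c) <= tolerance_c:
        return None
    interval_days = (found_cal - last_cal).days
    # Fraction of the interval that elapsed before the limit was crossed.
    fraction = tolerance_c / abs(as_found_error_c)
    return last_cal + timedelta(days=round(interval_days * fraction))


# Hypothetical example: a six-month interval, 0.4 degC as-found error,
# and a 0.2 degC tolerance.
crossed = estimate_out_of_spec_date(date(2024, 1, 1), date(2024, 7, 1), 0.4, 0.2)
print(crossed)
```

Every batch completed after the estimated date would then need to be reviewed, which is why a long calibration interval makes an out-of-spec finding so expensive.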

Ideally, temperature sensors would be calibrated after each batch, but every calibration carries costs: labour, lost production and the risk of damaging the instrument during handling. In most cases, the calibration interval is therefore a statistical calculation that weighs the risk of excessive sensor drift against the cost of conducting manual calibrations.

The batch nature of bioscience processes — where batches can run for days or weeks — doesn’t really lend itself to such an approach. Fermentation is a good example.

Fermenting processes

Fermentation is used to cultivate cell cultures. The cultures consume a nutrient solution, multiply, and create the desired product. Fermentation typically takes place in a series of bioreactors. Bioreactors are usually made of 316L stainless steel and are jacketed to either cool or heat as required by the reaction.

The cell culture to be grown is placed in the bioreactor, which is filled with a nutrient solution. The nutrient solution varies based on the specific cell culture and desired product but typically consists of glucose, glutamine, hormones and other growth factors. Agitator blades make sure that the solution and cell culture mix thoroughly to promote efficient growth within the vessel. The bioreactors are connected in series, with each successive vessel larger than the previous to contain the increasing cell mass.

The product that results from the reactions can be anything from antibiotics to vaccines and other cell-based products. Typical waste products of the reactions are CO2, ammonium and lactate. After the nutrient solution is consumed, the reaction is complete and the desired yield has been achieved, the solution moves to the harvesting process, where the product is separated from the dead cells and other waste products.

Fermentation batches essentially are broken up into several phases: sterile media preparation, fermentation, harvest, cleaning in place (CIP), final rinse and sterilisation in place (SIP). There are three common types of fermentation batch processes — single batch, intermittent harvest/fed batch and continuous batch:

- A single-batch fermentation process runs until there are no more nutrients left for the culture to consume. A typical run is 7–14 days.

- Intermittent harvest batch runs are similar to a single batch, except that as nutrients are depleted and product is harvested, fresh nutrient solution is added to allow for longer batch cycles. A typical run is 2–3 weeks. Similarly, a fed-batch process adds nutrients and additional feed solutions, but puts off harvest until the end of the batch cycle. Vaccine production is typically intermittent harvest, while protein production is typically fed batch.

- A continuous batch continually adds nutrients and harvests product and waste with a cell retention device, resulting in higher production concentration. This type of batch is especially used for labile processes such as stem cell production. Nutrients and new cell culture are continuously added and harvesting is done without shutting down the process. The process is only brought down for maintenance, cleaning and sterilisation of the vessel or for calibration.

With batches lasting for several weeks — or even months — an undetected out-of-spec temperature sensor could ruin the entire batch, at the cost of several million dollars’ worth of spoiled product.

That’s because temperature is usually the most important measured parameter in fermentation processes. It’s used to optimise growth and productivity and to monitor conditions in the vessel. Temperature is important in maintaining the solubility of the media as well as providing stable conditions for the produced protein. Fermenters usually have multiple sensors (Figure 3) monitoring temperature at various levels within the solution to maintain uniform temperature. Temperature sensors are also in the vessel jacketing to maintain adequate heating or cooling of the vessel.

Cleaning up

It’s important that unwanted biological elements (eg, foreign bacteria) do not grow in vessels. Contamination of tanks can result in lost batches or even complete tear down and rebuild of the vessels. To this end, fermentation tanks are typically cleaned and sterilised between each batch with CIP and SIP procedures.

CIP is a cleaning process that consists of injecting hot water and introducing a base to neutralise acids, followed by another injection of hot water. Once done, the entire vessel is rinsed with water. SIP is a sterilisation process that consists of injecting steam into the vessel and holding the temperature at around 121°C for up to an hour.

CIP is commonly used for cleaning bioreactors, fermenters, mix vessels and other equipment used in biotech, pharmaceutical and food and beverage manufacturing. CIP is performed to remove or obliterate previous cell culture batch components. It removes in-process residues, controls bioburden and reduces endotoxin levels within processing equipment and systems. This is accomplished with a combination of heat, chemical action and turbulent flow. Caustic solution (base) is the main cleaning solution, applied in single pass, with recirculation through the bioreactor followed by a WFI (water for injection) or PW (pure water) rinse. An acid solution wash is used to remove mineral precipitates and protein residues.

Calibration

Three-wire, platinum 100Ω resistance temperature detectors (RTDs) are the most common sensor type used. High accuracy and fast response are very important for temperature measurement in fermenters, so sensors are regularly calibrated to maintain measurement accuracy. Sensors within a vessel are also compared to each other to monitor potential sensor drift.
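
As a rough illustration of what sits behind a Pt100 reading, the Python sketch below converts between resistance and temperature using the standard IEC 60751 coefficients for temperatures at or above 0°C, and then compares sensors in one vessel against their median to flag a possible drifter. The coefficients are the published standard values rather than anything stated in this article. The function names, the sensor names and the 0.2°C comparison limit are hypothetical.

```python
import math
from statistics import median

# IEC 60751 coefficients for a Pt100, valid from 0 degC upwards.
R0 = 100.0        # resistance in ohms at 0 degC
A = 3.9083e-3
B = -5.775e-7


def pt100_resistance(temp_c: float) -> float:
    """Resistance in ohms at temp_c (degC), for temp_c >= 0."""
    return R0 * (1 + A * temp_c + B * temp_c ** 2)


def pt100_temperature(resistance_ohm: float) -> float:
    """Invert the quadratic above to get degC from ohms (>= 100 ohms)."""
    discriminant = A * A - 4 * B * (1 - resistance_ohm / R0)
    return (-A + math.sqrt(discriminant)) / (2 * B)


def flag_outliers(readings_c: dict, limit_c: float) -> list:
    """Names of sensors whose reading differs from the group median
    by more than limit_c. A hypothetical cross-check; a real vessel
    may have genuine temperature gradients between levels."""
    mid = median(readings_c.values())
    return sorted(name for name, t in readings_c.items() if abs(t - mid) > limit_c)


print(round(pt100_resistance(100.0), 4))
print(flag_outliers({"top": 37.02, "middle": 37.00, "bottom": 37.45}, 0.2))
```

A cross-check of this kind can only hint at drift, because it cannot tell which sensor is right. That limitation is what a built-in fixed-point reference is meant to address.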

Unlike CIP/SIP processes, calibration of sensors is not always performed between batch runs. One reason is that calibration is time-consuming and requires the entire process to be offline, which reduces production. Calibrating less often, however, allows unacceptable levels of measurement uncertainty to creep into the process between calibrations.

Process reliability engineers must therefore weigh these trade-offs carefully when setting calibration frequencies. Calibrating too often results in unacceptable production reductions, while calibrating too seldom can result in out-of-spec product. Consideration should be given to sensors that have better long-term stability, lower drift and (if possible) self-monitoring to indicate if a sensor is out of tolerance between calibration cycles.

Self-monitoring sensors

One of the most recent developments is the self-calibrating temperature sensor, which has a high-precision reference built into the sensor itself. This is accomplished using a physical fixed point known as the Curie point or Curie temperature. The Curie point is the temperature at which the ferromagnetic properties of a material abruptly change. This change can be detected electronically, which makes it possible to determine the moment at which the Curie temperature is reached.

The Curie point is a fixed constant for any given material. The sensor exploits this with a built-in reference element made of such a material, which provides a physical fixed point for comparison with the actual RTD temperature sensor. The reference material used for batch processes has a Curie temperature of 118°C. Each time a cooling phase is initiated from a temperature greater than 118°C (eg, from 121°C during the cooling phase of a SIP process), the sensor is calibrated automatically.

When the Curie temperature of 118°C is reached, the reference sensor transmits an electrical signal. At the same moment, a measurement is made in parallel with the RTD. Comparing these two values is effectively a calibration that identifies errors in the temperature sensor. If the measured deviation is outside set limits, the device issues an alarm or error message, and may also give a local visual indication.
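
The comparison itself is simple arithmetic, as the following Python sketch suggests. It is not the sensor's actual firmware: the 118°C reference comes from the text above, while the function name, the result fields and the 0.5°C limit are assumptions made for illustration.

```python
from dataclasses import dataclass

CURIE_REFERENCE_C = 118.0  # Curie temperature quoted in the article


@dataclass
class SelfCalibrationResult:
    rtd_reading_c: float   # RTD value captured when the reference fired
    deviation_c: float     # RTD reading minus the fixed-point reference
    within_limits: bool    # False would trigger the alarm or error message


def check_against_curie_point(
    rtd_reading_c: float,
    limit_c: float,
    reference_c: float = CURIE_REFERENCE_C,
) -> SelfCalibrationResult:
    """Compare the RTD reading taken at the instant the reference
    element signals its Curie point with the known reference value."""
    deviation = rtd_reading_c - reference_c
    return SelfCalibrationResult(rtd_reading_c, deviation, abs(deviation) <= limit_c)


# Hypothetical readings captured during two SIP cooling phases.
for reading in (118.35, 118.80):
    result = check_against_curie_point(reading, limit_c=0.5)
    status = "OK" if result.within_limits else "ALARM"
    print(f"RTD {reading:.2f} degC, deviation {result.deviation_c:+.2f} degC: {status}")
```

Because this is a single-point check at 118°C, it says most about sensor error near the sterilisation temperature.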

Because the acquired calibration data can be sent electronically and read by asset management software, an auditable certificate of calibration can also be generated automatically.
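
What such a record might contain can be sketched in a few lines of Python. The field names, the sensor tag and the JSON layout below are all hypothetical. Real asset management packages define their own formats, and none is described in this article.

```python
import json
from datetime import datetime, timezone


def build_calibration_record(
    sensor_tag: str,
    reference_c: float,
    measured_c: float,
    limit_c: float,
    timestamp: datetime,
) -> dict:
    """Assemble one self-calibration event as a plain dictionary
    that can be serialised and archived for audit purposes."""
    deviation = round(measured_c - reference_c, 3)
    return {
        "sensor_tag": sensor_tag,
        "timestamp_utc": timestamp.astimezone(timezone.utc).isoformat(),
        "reference_c": reference_c,
        "measured_c": measured_c,
        "deviation_c": deviation,
        "limit_c": limit_c,
        "result": "pass" if abs(deviation) <= limit_c else "fail",
    }


# Hypothetical event: tag, limit and timestamp are made up for illustration.
record = build_calibration_record(
    "TT-101", 118.0, 118.12, 0.5,
    datetime(2024, 3, 1, 14, 30, tzinfo=timezone.utc),
)
print(json.dumps(record, indent=2))
```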

With such a sensor, calibration can now be carried out automatically each time the temperature passes through the Curie point in SIP processes. This reduces the risk of drift-related process errors which could lead to costly lost production. In some cases, it could allow a facility to reduce the frequency of manual calibrations, allowing for greater production.

Quicker calibrations

All sensors have to be calibrated eventually. This involves removing the sensor from the process, which takes time and is subject to errors. The biggest problem is that most sensors require disconnecting the wires while removing the sensor and then reconnecting them after calibration. Such a procedure typically takes about 30 minutes. While it is fairly simple, wiring errors can occur, and wiring terminations are problematic in any manufacturing environment.

If the transmitter is improperly rewired or the wiring is damaged, the total calibration time could be increased by 10 to 20 minutes. In some cases, if the damage to the sensor wires is severe enough, it could require replacement of the calibrated temperature sensor.

Another recent development involves RTD sensors with a feature that does not require disconnecting wires when removing the sensor (Figure 4). The technician simply twists the top of the sensor a quarter turn and the sensor can be removed easily. Eliminating the need to disconnect and reconnect wiring cuts calibration time in half. A calibration can be performed in about 15 minutes.

Summary

Temperature is such a critical measurement in bioscience processes that the FDA and other agencies require regular calibration of temperature sensors. Most plants calibrate sensors every six months but sensors can drift out of calibration during that time, potentially ruining expensive batches. New technology makes it possible for RTDs to calibrate themselves at the end of each batch. And, when calibration is needed, another development eliminates the need to disconnect wires, cutting calibration time in half.
