Seeing with AI: flexible navigation in dynamic environments with Visual SLAM

Automation solutions based on camera vision and AI models overcome the limitations of existing AMR navigation technologies.

Automated materials handling and transport in logistics and manufacturing centres, as well as in major retail facilities, offers substantial benefits, including increased efficiency, profitability and safety, and greater resilience to labour fluctuations. Nevertheless, the vast majority of tasks and processes that could benefit from mobile robotics are still executed manually. At the same time, the trend in many industries towards mass customisation (in other words, producing smaller lots of greater variety in shorter product lifecycles) is affecting manufacturing as well as warehousing and logistics operations, and calls for increased use of flexible robotics.

This low adoption rate can ultimately be linked to the fact that most mobile robots sold today rely on expensive, legacy fixed-floor installations or 2D laser scanners — devices that can perceive the environment in only a narrow slice, as if looking through a mailbox slit. To know its location and how to navigate to its destination, a vehicle that depends on such outdated technologies requires a structured, static environment and can perform only very simple, precisely defined tasks — the opposite of the way modern warehouses, production plants and big box stores operate.

Automation solutions based on camera vision and powerful AI models overcome these limitations, as they offer a much richer and more intelligent perception of the environment. Automated vehicles equipped with such capabilities can easily navigate dynamic environments, execute complex tasks, and work in unstructured spaces shared with people. This technological progression is needed to bring autonomy to warehouses and to all other industries that depend on manual labour today.

Visual AI for localisation and navigation

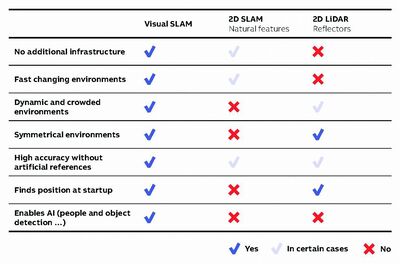

The solution that makes the above-mentioned capabilities possible is called Visual Simultaneous Localisation and Mapping (Visual SLAM). Using cameras, cutting-edge computer vision algorithms and AI models to perceive their surroundings, Visual SLAM-equipped robots build rich 3D maps of the environment to precisely locate themselves within it. Visual SLAM’s advantages are:

- No costly infrastructure installations required; the environment is perceived as it is and in real time.

- Functions in dynamic environments such as warehouses where the layout may change frequently and where people and objects are constantly moving around.

- Extremely robust: perceives visual characteristics of surroundings such as light intensity, contrast and shapes, thus avoiding confusion and allowing mobile systems to work alongside people and moving objects.

- Allows robots to navigate on ramps and uneven floors. The number of mapped environment elements is so high that parts of the environment can be changed without degrading localisation quality.

- Finally, observing the environment in 3D at all heights enables robots of different kinds to collaborate, exchange data and use the same map of the environment to localise, thus forming swarm intelligence.

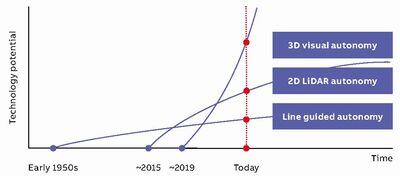

Naturally, these advantages add up to considerable market potential. For instance, according to the DHL Logistics Trend Radar [1], sales of mobile robots in the logistics industry are projected to grow from US$7.11 billion in 2022 to US$21.01 billion by 2029. This growth is facilitated by a technology shift from 2D laser scanner autonomy to 3D Visual SLAM autonomy, which is significantly advancing mobile robots in various applications and boosting their rate of adoption (Figure 1).
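To put the projection in perspective, the implied compound annual growth rate can be worked out from the two figures quoted above. The short Python sketch below only restates the arithmetic; the function name is illustrative and not part of the DHL report.

```python
def compound_annual_growth_rate(start_value: float, end_value: float, years: int) -> float:
    """Return the constant yearly growth rate that turns start_value into end_value."""
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted in the text: US$7.11 billion (2022) and US$21.01 billion (2029).
cagr = compound_annual_growth_rate(7.11, 21.01, 2029 - 2022)
print(f"Implied compound annual growth rate: {cagr:.1%}")
```

Under these figures the market would need to grow by just under 17% a year over the seven-year period.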

A 3D VSLAM system’s hardware consists of an AI-enabled compute unit that runs proprietary algorithms and a set of cameras that perceive the environment. Tight integration of the hardware and software enables optimal performance of the underlying software and AI models.

How vision-based positioning works

3D Visual SLAM software and hardware reliably estimate the 3D position, orientation and velocity of mobile robots. Thanks to sophisticated computer vision algorithms and AI models, these systems can estimate their own position very precisely even under the toughest conditions. Built-in AI algorithms never stop learning: with each hour of operation, they can leverage new experiences to update 3D maps of the environment, thus reflecting changes in the operational space and further enhancing the system’s long-term robustness.

Visual SLAM technology serves use cases ranging from single robots working on their own, such as a scrubber-drier robot in a medium-sized grocery store, up to fleets of hundreds of mobile robots of different types in large manufacturing plants. In the latter case, units can intelligently interact and learn from each other by building a joint 3D visual map that leverages AI models and the latest data collected from the environment.

A complete solution for autonomous navigation

3D Visual SLAM systems equip mobile robotics platforms for materials handling, manufacturing, professional cleaning and other service robotics applications with complete navigation and obstacle avoidance capabilities.

One key differentiator is the ability to cope with challenging and unstructured environments, such as busy warehouses or crowded airports. The AI-based perception capabilities make mobile machines completely autonomous, without the need for costly human-in-the-loop interventions.

With on-board Edge AI, robots can plan their best and most efficient paths. They can operate as single entities or, as members of a fleet, can share map and traffic information with each other, accepting orders and commands from third-party fleet management systems (FMS) using modern and accepted standards such as VDA5050.
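As a rough illustration of what an order from a fleet management system might look like, the sketch below assembles a minimal VDA 5050-style order message in Python. The field names are intended to follow the public VDA 5050 order schema and should be checked against the specification version in use; the manufacturer, serial number, version string and node names are hypothetical placeholders.

```python
import json
from datetime import datetime, timezone


def build_order(order_id, node_ids, header_id=1):
    """Build a minimal VDA 5050-style order that visits node_ids in sequence."""
    nodes, edges = [], []
    for index, node_id in enumerate(node_ids):
        # Nodes and edges share one sequence counter: nodes are even, edges odd.
        nodes.append({"nodeId": node_id, "sequenceId": 2 * index,
                      "released": True, "actions": []})
        if index > 0:
            previous_id = node_ids[index - 1]
            edges.append({"edgeId": f"{previous_id}-{node_id}",
                          "sequenceId": 2 * index - 1,
                          "released": True,
                          "startNodeId": previous_id,
                          "endNodeId": node_id,
                          "actions": []})
    return {
        "headerId": header_id,
        "timestamp": datetime.now(timezone.utc).isoformat(),  # ISO 8601, UTC
        "version": "2.0.0",                 # assumed protocol version
        "manufacturer": "ExampleRobotics",  # hypothetical
        "serialNumber": "AMR-0001",         # hypothetical
        "orderId": order_id,
        "orderUpdateId": 0,
        "nodes": nodes,
        "edges": edges,
    }


order = build_order("order-42", ["dock", "aisle-3", "packing"])
print(json.dumps(order, indent=2))
```

In a real deployment such a message would typically be published to the vehicle over the transport the fleet manager uses; that part is omitted here.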

Fusing information to maximise efficiency and safety

To work effectively in as many environments as possible, the 3D Visual SLAM system must be able to orchestrate up to eight cameras and perform precise synchronisation, automatic gain control and camera exposure control to maximise the information content of images acquired by the cameras, regardless of lighting conditions. Visual AI workflows must execute directly on the unit, with minimal latency or computational burden while benefiting from precisely tuned camera image signal processing (ISP). The associated proprietary hardware design, which addresses the technological challenge of transmitting high-quality synchronised camera imagery over long wiring, allows OEMs to position up to eight cameras anywhere within the chassis of the vehicle, enabling 360° surround view coverage.
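The exposure control mentioned above can be pictured as a small feedback loop that nudges each camera's exposure time towards a target image brightness. The following Python sketch is a generic damped controller, not the proprietary method described in this article; the target brightness, exposure limits and damping factor are assumed values chosen for illustration.

```python
def next_exposure_us(current_exposure_us, mean_brightness,
                     target_brightness=0.45,
                     min_exposure_us=50.0, max_exposure_us=20000.0,
                     damping=0.5):
    """Return the exposure time for the next frame.

    mean_brightness is the average pixel intensity of the last frame,
    normalised to the range 0.0 (black) to 1.0 (saturated).
    """
    if mean_brightness <= 0.0:
        # Completely black frame: double the exposure, up to the limit.
        return min(max_exposure_us, current_exposure_us * 2.0)
    ratio = target_brightness / mean_brightness
    # The damping exponent takes only part of the correction per frame,
    # which helps to avoid oscillation when lighting changes abruptly.
    proposed = current_exposure_us * ratio ** damping
    return max(min_exposure_us, min(max_exposure_us, proposed))


# A frame that is too bright shortens the exposure; a dark one lengthens it.
print(next_exposure_us(1000.0, 0.9))
print(next_exposure_us(1000.0, 0.1))
```

In a multi-camera rig, one such loop would run per camera, with gain adjusted in a similar way once the exposure limit is reached.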

AI-powered algorithms also enable robots to precisely estimate their position. This is achieved by extracting discriminative elements from images, such as the corner of a window, and using these elements to build a representative yet compact 3D model of an environment. To achieve a high level of reliability and enable operations regardless of lighting conditions or perspective, detection and association of discriminative elements is performed by AI-based models that run on fast on-board graphics processing units (GPUs).
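Once the same element, say a window corner, has been found in two camera images, its 3D position can be recovered by triangulation. The Python sketch below shows the textbook case of a rectified stereo pair, which is a simplifying assumption rather than a description of any particular product; the `StereoRig` type and its parameter values are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StereoRig:
    focal_px: float    # focal length in pixels
    baseline_m: float  # distance between the two cameras in metres
    cx: float          # principal point, horizontal pixel coordinate
    cy: float          # principal point, vertical pixel coordinate


def triangulate(rig, u_left, v_left, u_right):
    """Return (x, y, z) in metres, in the left camera frame, for one matched feature."""
    disparity = u_left - u_right
    if disparity <= 0:
        raise ValueError("a valid match must have positive disparity")
    z = rig.focal_px * rig.baseline_m / disparity  # depth from disparity
    x = (u_left - rig.cx) * z / rig.focal_px
    y = (v_left - rig.cy) * z / rig.focal_px
    return (x, y, z)


rig = StereoRig(focal_px=500.0, baseline_m=0.1, cx=320.0, cy=240.0)
# The same corner seen at column 370 in the left image and 345 in the right.
print(triangulate(rig, u_left=370.0, v_left=240.0, u_right=345.0))
```

Repeating this for many matched elements yields the sparse 3D point set from which a compact map can be built.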

In order to effectively use the above-mentioned sensor types, robotics systems require accurate calibration. The processes underlying calibration are complex yet essential in achieving both robot positioning and autonomous navigation. Calibration makes it possible to identify internal camera parameters, such as focal length and distortion of lenses, as well as the spatial transformations between different sensors, for instance inertial measurement units, ultrasonic sensors and time-of-flight cameras.
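To make the internal camera parameters concrete, the Python sketch below projects a 3D point into pixel coordinates using a pinhole model with two radial distortion coefficients. This is a widely used textbook model and is shown here as an assumption, not as the calibration model of any specific system; the parameter values are illustrative, and the transformations between sensors are left out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CameraIntrinsics:
    fx: float        # focal length in pixels, horizontal
    fy: float        # focal length in pixels, vertical
    cx: float        # principal point, horizontal
    cy: float        # principal point, vertical
    k1: float = 0.0  # first radial distortion coefficient
    k2: float = 0.0  # second radial distortion coefficient


def project(cam, point_cam):
    """Project a 3D point given in the camera frame (metres) to pixel coordinates."""
    x_c, y_c, z_c = point_cam
    if z_c <= 0:
        raise ValueError("point must lie in front of the camera")
    x, y = x_c / z_c, y_c / z_c  # normalised image coordinates
    r2 = x * x + y * y
    radial = 1.0 + cam.k1 * r2 + cam.k2 * r2 * r2
    return (cam.fx * x * radial + cam.cx, cam.fy * y * radial + cam.cy)


ideal = CameraIntrinsics(fx=500.0, fy=500.0, cx=320.0, cy=240.0)
barrel = CameraIntrinsics(fx=500.0, fy=500.0, cx=320.0, cy=240.0, k1=-0.2)
print(project(ideal, (0.2, 0.0, 2.0)))
print(project(barrel, (0.2, 0.0, 2.0)))
```

Calibration is the inverse problem: given many observations of known targets, it estimates the values of `fx`, `fy`, `cx`, `cy`, `k1` and `k2` that best explain the measured pixel positions.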

People first

Naturally, all the above-mentioned capabilities add up not only to precise and accurate interpretations of environments but also to a high level of safety for people. AI-based computer vision techniques leverage the rich information content of visual images in order to obtain not only 3D distance information, but also much richer scene understanding, such as the detection of people and prediction of their relative motions. Through the combination of AI-based detection methods with 3D geometrical rules, robots equipped with this technology can detect and track people using cameras all around a vehicle. This crucial scene-based information enables the technology to adapt vehicle behaviour accordingly, for example, by allowing vehicles to swiftly evade static obstacles that are blocking their path but drive more cautiously in the presence of people or come to a complete halt in front of people. Modern AI-based Visual SLAM technology can use multiple stereo cameras for detecting and tracking people to ensure safe and human-friendly navigation in crowded industrial environments.
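The behaviour described above, passing static obstacles briskly while slowing or stopping for people, can be sketched as a simple speed rule. The Python example below is purely illustrative: the distance thresholds and cruise speed are assumed values, and a real vehicle would rely on certified safety functions rather than on a rule of this kind.

```python
from enum import Enum


class ObstacleKind(Enum):
    STATIC = "static"
    PERSON = "person"


def speed_limit_mps(kind, distance_m, cruise_mps=1.5,
                    stop_distance_m=1.0, slow_distance_m=4.0):
    """Return the maximum allowed speed given the nearest detected obstacle."""
    if kind is ObstacleKind.STATIC:
        # The path planner steers around static obstacles; no speed reduction here.
        return cruise_mps
    if distance_m <= stop_distance_m:
        return 0.0  # come to a complete halt in front of a person
    if distance_m >= slow_distance_m:
        return cruise_mps
    # Between the two thresholds, ramp the speed linearly with distance.
    fraction = (distance_m - stop_distance_m) / (slow_distance_m - stop_distance_m)
    return cruise_mps * fraction


print(speed_limit_mps(ObstacleKind.STATIC, 2.5))
print(speed_limit_mps(ObstacleKind.PERSON, 2.5))
print(speed_limit_mps(ObstacleKind.PERSON, 0.5))
```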

Lifelong Visual SLAM

One challenge for any type of positioning system is that environments change over time. This may be due to changing seasons or lighting conditions, changes in factory floor layouts, or simply in warehouse or shop inventories. Without smart algorithms, it is impossible to safely deploy mobile robots in dynamic environments. To master this challenge, AI-based algorithms enable the building and maintaining of always-up-to-date maps of environments. These maps incorporate data from multiple conditions, such as during cloudy and sunny weather, bright and dark lighting situations, and changes in the elements present in an area. These changes are detected automatically and swiftly incorporated into a lifelong map without any intervention.
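One simple way to picture a lifelong map is to attach a confidence value to every mapped element, raise it when the element is seen again and lower it when the element was expected but not found. The Python sketch below follows that idea under assumed gain, decay and pruning values; it is a toy model and not the algorithm used by any particular Visual SLAM product.

```python
class LifelongMap:
    """Toy landmark map that strengthens re-observed elements and forgets missing ones."""

    def __init__(self, gain=0.3, decay=0.2, prune_below=0.1):
        self.gain = gain
        self.decay = decay
        self.prune_below = prune_below
        self.confidence = {}  # landmark id -> confidence between 0 and 1

    def update(self, expected_ids, observed_ids):
        """Fold one visit into the map.

        expected_ids: landmarks that should be visible from the current pose.
        observed_ids: landmarks actually detected in the current images.
        """
        observed = set(observed_ids)
        for landmark_id in observed:
            old = self.confidence.get(landmark_id, 0.0)
            self.confidence[landmark_id] = old + self.gain * (1.0 - old)
        for landmark_id in set(expected_ids) - observed:
            if landmark_id in self.confidence:
                self.confidence[landmark_id] *= 1.0 - self.decay
                if self.confidence[landmark_id] < self.prune_below:
                    del self.confidence[landmark_id]  # element has disappeared


lifelong_map = LifelongMap()
lifelong_map.update(expected_ids=[], observed_ids=["shelf-a", "pillar-7"])
# The shelf is later removed, while the pillar keeps being seen.
for _ in range(5):
    lifelong_map.update(expected_ids=["shelf-a", "pillar-7"], observed_ids=["pillar-7"])
print(sorted(lifelong_map.confidence))
```

After a handful of visits the removed shelf drops out of the map while the pillar's confidence keeps growing, which mirrors how changes are absorbed without manual intervention.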

The path to human-like intelligence

As the number of mobile robots in production centres, warehouses, logistics centres and retail facilities grows, complexity will increase. But to efficiently manage this complexity, mobile robots will need to be even simpler to set up and operate than they are today. Ideally, as they become increasingly capable of harnessing generative AI and large language models, they will be able to set up and optimise their configurations and interactions on their own. At that point, based on a few simple instructions from human operators, they will be able to autonomously explore the available space and plan the paths and flows of materials through that space. This revolutionary shift will increase the overall robustness and throughput of operations — and will likely generate unprecedented additional value.

1. DHL 2024, Indoor Mobile Robots: Trend Overview, <https://www.dhl.com/au-en/home/innovation-in-logistics/logistics-trend-radar/amr-logistics.html>
