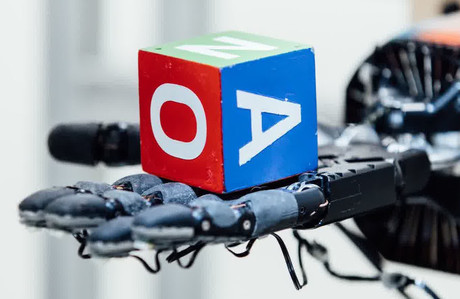

AI teaches dexterity to a robotic hand

Researchers at non-profit AI research company OpenAI have taught an AI system called Dactyl to manipulate a robot hand with human-like dexterity.

The experiment so far has been to teach the hand to rotate a cubic block so that it presents specific faces in specific orientations on demand, using human-like finger manipulations. Unlike a typical industrial robot with seven degrees of freedom of rotation, a robot hand has 24, which complicates the control algorithm enormously.

The way around the problem has been to train the AI system entirely in simulation and transfer its knowledge to reality, adapting to real-world physics.

OpenAI said that although the first humanoid hands were developed decades ago, using them to manipulate objects effectively has been a long-standing challenge in robotic control. Unlike other problems such as locomotion, progress on dexterous manipulation using traditional robotics approaches has been slow, and current techniques remain limited in their ability to manipulate objects in the real world.

By running the simulation in a distributed computing environment of more than 6000 CPU cores, the system can accumulate the equivalent of 100 years of trial and error at spinning the block in roughly 50 hours. Various factors are randomised during training to maximise the learning, such as the friction between the hand and the block, and even the simulated gravity.
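The randomisation described above can be illustrated with a minimal Python sketch. It is not OpenAI's implementation: the `PhysicsParams` class, the `sample_physics` and `sample_episodes` functions, and the numeric ranges are all hypothetical, chosen only to show the idea of drawing fresh physics settings for every simulated episode.

```python
import random
from dataclasses import dataclass


@dataclass
class PhysicsParams:
    """Physics settings for one simulated episode (hypothetical structure)."""
    friction: float  # friction coefficient between hand and block
    gravity: float   # gravitational acceleration in m/s^2


# Assumed ranges for illustration only; the article does not give real values.
FRICTION_RANGE = (0.5, 1.5)
GRAVITY_RANGE = (9.0, 10.6)


def sample_physics(rng: random.Random) -> PhysicsParams:
    """Draw one random set of physics parameters."""
    return PhysicsParams(
        friction=rng.uniform(*FRICTION_RANGE),
        gravity=rng.uniform(*GRAVITY_RANGE),
    )


def sample_episodes(n: int, seed: int = 0) -> list:
    """Return n independently randomised parameter sets, one per episode."""
    rng = random.Random(seed)
    return [sample_physics(rng) for _ in range(n)]


if __name__ == "__main__":
    for params in sample_episodes(3):
        print(params)
```

Because each episode sees slightly different physics, a policy trained this way cannot rely on any single simulated setting being exactly right, which is the property that makes transfer to the real hand plausible.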

The trick was to build a simulation whose results transfer readily to the real-world physics of the robotic hand, which then uses a vision system to track the fingers and the orientation of the block.

Dactyl was designed to be able to manipulate arbitrary objects, not just those that have been specially modified to support tracking. Therefore, Dactyl uses regular RGB camera images to estimate the position and orientation of the object. By combining two independent networks, the control network that reorients the object given its pose and the vision network that maps images from cameras to the object’s pose, Dactyl can manipulate an object by seeing it.
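The way the two networks fit together can be sketched in Python as a simple composition. This is an illustration under stated assumptions rather than Dactyl's actual code: `make_policy`, `stub_vision` and `stub_control` are hypothetical names, the pose is assumed to be a position plus a quaternion, the goal pose argument is an assumption, and the stubs stand in for trained networks.

```python
from typing import Callable, Sequence, Tuple

# Assumed pose layout: position (x, y, z) followed by a quaternion (w, x, y, z).
Pose = Tuple[float, ...]
Action = Tuple[float, ...]


def make_policy(
    vision: Callable[[Sequence[object]], Pose],
    control: Callable[[Pose, Sequence[float], Pose], Action],
) -> Callable[[Sequence[object], Sequence[float], Pose], Action]:
    """Chain a vision model and a control model into one policy."""

    def act(images, fingertips, goal_pose):
        # Stage 1: estimate the object's pose from camera images.
        pose = vision(images)
        # Stage 2: choose an action from the pose, fingertip locations and goal.
        return control(pose, fingertips, goal_pose)

    return act


def stub_vision(images) -> Pose:
    """Placeholder for a trained vision network; ignores its input."""
    return (0.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0)


def stub_control(pose, fingertips, goal_pose) -> Action:
    """Placeholder for a trained control network; returns the pose error."""
    return tuple(g - p for g, p in zip(goal_pose, pose))


if __name__ == "__main__":
    policy = make_policy(stub_vision, stub_control)
    goal = (0.0, 0.0, 0.0, 0.0, 1.0, 0.0, 0.0)
    print(policy(["cam0", "cam1", "cam2"], [0.0] * 15, goal))
```

Keeping the two stages independent means the control stage only ever sees a pose, so it does not matter whether that pose came from a simulator or from cameras.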

In carrying out the research, OpenAI learned some things it had not expected. For example, it found that tactile sensing is not necessary to manipulate real-world objects: its robot receives only the locations of the five fingertips along with the position and orientation of the cube. Although the robot hand has touch sensors on its fingertips, better performance was achieved by using a limited set of sensors that could be modelled effectively in the simulator than by using a rich sensor set with values that were hard to model, such as tactile force sensing.

OpenAI also discovered that randomisations developed for one object generalise to others with similar properties. After developing the system for manipulating a cubic block, the researchers tried training it with an octagonal prism and found that it achieved high performance using only the randomisations designed for the block, while using a sphere was less successful.

Read more about the research on OpenAI’s blog.
