Leveraging virtualisation: achieving higher business continuity within industrial facilities

By Jim Frider, Product Marketing Manager, Invensys Operations Management

Wednesday, 07 November, 2012

Virtualisation offers the promise of lower IT costs and higher levels of business continuity for industrial companies. This article provides a basic introduction to virtualisation and how it can specifically benefit industrial companies. High availability and disaster recovery scenarios are presented, along with the downtime targets that can be expected after an event or system failure.

Today, many of the world’s top companies are using software virtualisation technology to deliver significant cost savings, improved efficiency, greater agility, enhanced system availability and improved disaster recovery capabilities.

The typical industrial facility, including manufacturing plants, utilities and processing companies, has many important software applications that can be virtualised. Software like HMI applications, process data historians and manufacturing execution systems (MES) along with other analytical and reporting applications can all be virtualised.

What is virtualisation?

In simple terms, virtualisation is the creation and employment of a ‘virtual’ (software emulated) version of an ‘actual’ version of something. Broadly speaking, virtualisation can apply to physical devices, operating systems, network resources, device drivers or any actual thing for which a virtual equivalent can be substituted. Virtualisation is a form of software abstraction, which allows users to manipulate the virtual versions of things in ways which would be impossible for their physical counterparts. It is this fundamental ability to manipulate the virtual versions of things that provides the power and flexibility to derive so many benefits from the use of virtualisation technology.

Hardware virtualisation

Hardware virtualisation employs software technology, like that offered by Microsoft and VMware, to transform or ‘virtualise’ a computer to create a virtual computer that can run its own operating system and applications just like a physical computer. With virtualisation, several applications and their required operating systems can run safely at the same time on a single physical computer with each having access to the resources it needs when it needs them. Today, this is all possible with commercial off-the-shelf computer hardware and operating systems.

At the heart of the virtualisation process is a software component called the ‘hypervisor’, a term first coined during the early IBM mainframe days. There are two general categories of hypervisor. Type 1 hypervisors run directly on the physical host hardware and manage ‘guests’ or virtual machines so their operating systems can run concurrently on the physical host hardware. This is achieved by intercepting instructions from the guest operating systems and resolving them so that the guests function properly in their virtualised environment. VMware ESXi and Microsoft Hyper-V are examples of type 1 hypervisors. Conversely, type 2 hypervisors run on top of the host operating system and are not as commonly used with PC-based systems.

Desktop virtualisation

Besides the virtualisation of industrial applications, another area of virtualisation that is gaining momentum is virtual desktop infrastructure (VDI). Commonly used desktop applications and their required system resources are contained within a VDI and accessed from a network server. As none of the software runs on the user’s local machine, less costly computer equipment can be used. IT support responsibilities are reduced and the burden of data backup can be removed from the user. Besides the many IT-related benefits, VDI users have the added benefit of being able to access their virtualised desktop from a variety of other devices including their smartphone, tablet or home computer.

Virtualisation platforms

Many companies are now using virtualisation at the enterprise level to create entire virtualised computing infrastructures that allow IT departments to automatically deploy computing resources when and where they need them, with a minimum of manual IT intervention. Cloud-based implementations promise even more flexibility and economy by outsourcing computing resources to other vendors such as Microsoft with its Azure platform or Amazon and others. Virtualisation platforms are already providing businesses of all kinds with tremendous IT cost savings and improved infrastructure utilisation.

History of virtualisation

The high cost of mainframe computers in the 1960s led IBM engineers to develop ways to improve the efficiency of their equipment. By logically partitioning their machines they were able to offer multitasking. For the first time multiple applications could run at the same time - significantly improving utilisation.

As low-cost, PC-based client-server architectures became the computing standard in the 1980s and 1990s the focus shifted to exploiting this new architecture by delivering more computing resources and applications to more users. Virtualisation was not a technology considered by most IT departments. However, by the late 1990s the sheer number of servers and desktop machines reached the point where virtualisation technology became viable as a cost reduction strategy.

In 1999, VMware released its first virtualisation products and by 2005 processors became available that directly supported virtualisation. Today, a majority of large corporations are using virtualisation to reduce their IT costs.

Phases of adoption

Industrial companies often adopt virtualisation technology in two phases; we will call them Virtualisation 1.0 and Virtualisation 2.0.

Virtualisation 1.0

In this first phase the emphasis is on IT and how virtualisation can be used to lower IT costs, improve IT staff efficiency and improve hardware utilisation. IT works on creating virtual machines of key applications and consolidating them on corporate servers. This first phase of virtualisation allows companies to reduce their hardware costs, improve their server utilisation and improve their ability to operate and maintain a wide range of software applications. IT service improves because IT takes on the responsibility for managing applications which have been reluctantly managed by the groups directly using the applications, like operations or maintenance. HMI applications, process data historians and web report servers are examples.

Virtualisation 2.0

During the second phase of virtualisation, companies often deploy multiple instances of the same application to ensure business continuity or to improve application performance via load balancing.

For business continuity, multiple instances of an application, and the data servers furnishing it with data, are run simultaneously so that if the primary application or data server fails, the secondary application instance and data servers automatically take over and downtime is minimised.
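The failover behaviour described above can be sketched in a few lines of Python. This is only an illustration of the idea, not how any particular virtualisation product implements it: the `Instance` class, the `healthy` flag and the instance names are hypothetical, and a real system would set the health status from heartbeat or monitoring data.

```python
from dataclasses import dataclass


@dataclass
class Instance:
    """A running copy of an application (hypothetical model for illustration)."""
    name: str
    healthy: bool = True


def select_active(primary: Instance, secondary: Instance) -> Instance:
    """Return the instance that should currently serve users."""
    if primary.healthy:
        return primary
    if secondary.healthy:
        # Primary has failed, so the secondary takes over.
        return secondary
    raise RuntimeError("no healthy instance available")


primary = Instance("hmi-primary")
secondary = Instance("hmi-secondary")

print(select_active(primary, secondary).name)  # primary serves while healthy

primary.healthy = False  # simulate a failure of the primary
print(select_active(primary, secondary).name)  # secondary takes over
```

Because both instances are already running, the switch involves no start-up delay, which is what keeps downtime low in this arrangement.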

If simultaneous application instances are run on servers located in different physical locations, disaster recovery is much easier.

Some applications can be run in load balancing mode, in which the processing load is shared across two or more virtual machines, improving the overall performance of the application without having to invest in a second physical server.
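As a rough illustration of load sharing, the sketch below distributes incoming requests across virtual machines in simple rotation (round robin). Round robin is just one possible policy, chosen here for brevity; the function name, request labels and machine names are assumptions made for the example.

```python
from itertools import cycle


def assign_round_robin(requests, machines):
    """Pair each request with a virtual machine, rotating through the machines in order."""
    if not machines:
        raise ValueError("at least one virtual machine is required")
    rotation = cycle(machines)
    return [(request, next(rotation)) for request in requests]


# Hypothetical requests shared across two virtual machines.
assignments = assign_round_robin(["r1", "r2", "r3", "r4"], ["vm-a", "vm-b"])
for request, machine in assignments:
    print(f"{request} -> {machine}")
```

With two virtual machines, each one handles every second request, so neither carries the full load.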

Benefits to IT, operations and engineering

Virtualisation of industrial applications can benefit many groups within a business. Let’s explore the benefits gained from virtualisation based on business function, namely IT, operations and engineering.

IT benefits

There are three main areas of benefit from virtualisation for the IT organisation:

- Cost reduction: Through consolidation of physical servers, virtualisation allows much greater utilisation of server hardware, so fewer servers are required and less energy is consumed in data centres. Virtualisation also extends the life cycle of applications because they are insulated from hardware or software technology shifts, generating more ROI for the business.

- Management: Fewer hardware upgrades are required over time, reducing capital expenditure requirements and IT workload, and the use of virtual machines facilitates centralised management of applications since fewer applications reside on local servers. Fast deployments can be achieved as self-contained virtual machines can be easily deployed without having to install the required operating system, prerequisite software or the application itself on a dedicated server. Corporate standard libraries of virtualised applications allow the creation of standardised application configurations that deliver maximum value to the business.

- Support: The implementation of virtual desktops allows much more efficient management of desktop machines, and since application software doesn’t reside on each desktop machine, new applications can be rolled out to a large number of users much faster. This also allows for more effective backup procedures, since all data stays on corporate or cloud servers, effectively eliminating data loss.

Operations benefits

For operations, business continuity can be achieved because multiple instances of the same virtual machine can run in an automatic failover mode. If the failover instance is located in a separate physical location, disaster recovery is also enhanced. The reduction in physical server footprint leads to a higher return on capital, and a reduction in the mean time to recover (MTTR) lowers operational cost. Thin client PCs on the plant floor running virtual desktops also reduce the support burden.

Engineering benefits

For engineering, development costs are reduced since fewer physical servers are required and fewer modifications need to be made over time to keep applications running properly. Virtualised instances of object-based applications, like Wonderware ArchestrA System Platform, can be developed and deployed faster than conventional applications; and since multiple instances of a virtual machine can run at the same time, developers can work in a more collaborative and efficient manner.

Levels of availability

When we speak about a ‘high availability’ solution we typically mean a solution with redundant software and/or hardware components that ‘fail over’ to an unaffected system to enable a predefined level of availability over a specific time frame.

Levels of availability are detailed in Table 1.

| Availability | Description | Expected failover | Details |
| --- | --- | --- | --- |
| Level 0 | No redundancy | None | No redundancy built into the system architecture. |
| Level 1 | Cold standby | Availability: 99%; downtime: 4 days/yr | Primary and secondary systems, manual failover to secondary system, data periodically synchronised. |
| Level 2 | High availability | Availability: 99.9%; downtime: 8 h/yr | Virtualisation used for primary and secondary systems. Disaster recovery via virtual machine systems located in geographically separate locations. |
| Level 3 | Hot redundancy | Availability: 99.99%; downtime: ~52 min/yr | Full synchronisation of primary and secondary systems. |
| Level 4 | Fault tolerant | Availability: 99.999%; downtime: <5 min/yr | Fault tolerant hardware, lock step failover to redundant application instance. |
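The downtime figures in the table follow directly from the availability percentages. The short Python sketch below shows the arithmetic, assuming a 365-day year of continuous operation; the function name is chosen for illustration only. The table values appear to be rounded versions of these results.

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours, ignoring leap years


def downtime_hours_per_year(availability_percent: float) -> float:
    """Return the yearly downtime implied by an availability percentage."""
    return (1 - availability_percent / 100) * HOURS_PER_YEAR


for level, percent in [(1, 99.0), (2, 99.9), (3, 99.99), (4, 99.999)]:
    hours = downtime_hours_per_year(percent)
    print(f"Level {level}: {percent}% -> {hours:.2f} h/yr ({hours * 60:.1f} min/yr)")
```

For example, 99.9% availability leaves 0.1% of 8,760 hours, or about 8.8 hours of downtime per year, and 99.999% leaves roughly 5.3 minutes.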

Implementation scenarios

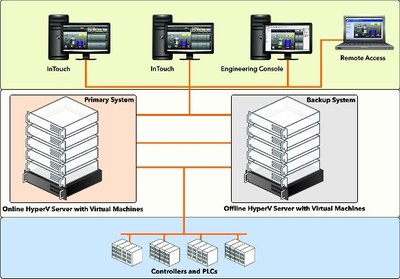

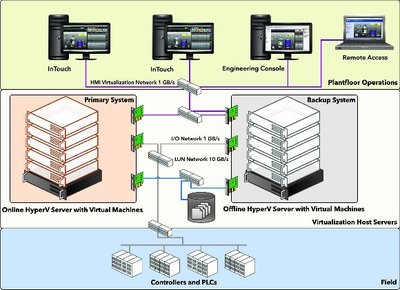

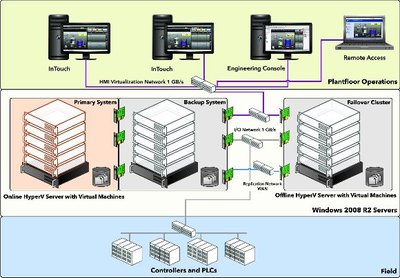

High-availability implementations

It is possible to achieve Level 2 or even Level 3 availability for applications like HMI and supervisory control using two, preferably identical, hardware servers (bought at the same time) each loaded with an identical virtual machine. Identical hardware and virtualised applications help ensure that if a failover occurs there are no deviations in system performance resulting from differences in hardware or software.

Achieving Level 4 availability usually requires an investment in fault tolerant hardware, including servers, disk systems, power supplies and network cards. At this availability level, downtime is reduced to very low levels: less than 5 minutes per year, for example.

The performance of any high availability (HA) solution is dependent on the quality and implementation of the HA architecture.

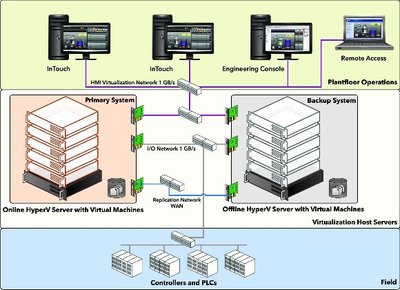

Disaster recovery implementations

With the risk of natural disasters and a growing threat from terrorism, many industrial companies are exploring ways to recover faster from possible catastrophic disasters. Virtualisation provides a realistic and practical way to recover critical applications and their associated data more quickly and economically. As with high-availability implementations, Level 2 or Level 3 availability can be achieved if identical physical servers and identical virtual machines are run together in failover mode, in this case located in different geographic locations.

High availability combined with disaster recovery

The goal of a high availability and disaster recovery (HADR) solution is to provide a mechanism that automatically shifts data processing and retrieval for a critical industrial application to a standby system. For ‘normal’ failure scenarios this is a standby system in the same facility; in the event of a catastrophe it is a standby system located in a different geographic location.

By combining high availability and disaster recovery architectures, industrial applications can be made highly available and able to quickly recover from a disaster.
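The decision an HADR solution makes can be pictured as a simple selection rule: use a standby in the same facility for ordinary failures, and a standby at another site when the whole facility is lost. The Python sketch below illustrates that rule only; the `Standby` class, the `site_lost` flag and the site names are hypothetical and do not reflect any vendor's implementation.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Standby:
    """A standby system that can take over the application (hypothetical model)."""
    name: str
    site: str
    available: bool = True


def choose_standby(primary_site: str, site_lost: bool,
                   standbys: List[Standby]) -> Optional[Standby]:
    """Prefer a standby in the primary's facility unless that whole site is lost."""
    candidates = [s for s in standbys if s.available]
    if not site_lost:
        for standby in candidates:
            if standby.site == primary_site:
                return standby
    # Catastrophic event, or no local standby available: go to another site.
    for standby in candidates:
        if standby.site != primary_site:
            return standby
    return None


standbys = [Standby("local-standby", "plant-a"), Standby("remote-standby", "plant-b")]
print(choose_standby("plant-a", site_lost=False, standbys=standbys).name)
print(choose_standby("plant-a", site_lost=True, standbys=standbys).name)
```

The first call models a server failure inside the plant and selects the local standby; the second models the loss of the plant itself and selects the remote one.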

Summary

Today’s virtualisation software solutions from Microsoft, VMware and other vendors provide a cost-effective way to improve the availability and disaster recovery capabilities of critical, industrial applications such as HMI and supervisory systems. Standard, off-the-shelf computer hardware and software can be used to lower costs and reduce the level of expertise needed to implement these types of solutions. Any manufacturer, processor or utility needs to evaluate these new approaches so that they too can mitigate risks and ensure continuity at their facilities.
